Journal article recommender

Introduction

I like to keep up on the current academic literature, but so many papers are published each week that it’s hard even to find the relevant ones to read. I created a tool so a computer can do the searching for me.

Previously there were two main ways to stay up to date: alerts or RSS feeds. RSS feeds give you all the recent articles from a particular publisher, but you have to sort through them manually to find relevant articles. Alerts are more specific and have the advantage that they automatically notify you when a paper contains a particular keyword or cites a particular work. Unfortunately, with a small set of keywords you are likely to create an alert that is either much too broad (e.g. ‘graphene’) or much too specific (‘Dyakonov waves’).

What would really be nice is an alert system that has a more holistic view of what you’re interested in: something that knows the papers you have liked in the past, so it can predict new articles you’d like to read. Before I started this project I was pretty excited about the ReadCube citation manager. One of its promised features was personalized recommendations based on your library of read papers. Perfect! …Until I found out that the feature had been “coming soon” for over a year.[1]

I decided to implement such a personalized recommender myself. It would rank new articles based on how similar they are to articles I have already read.

Model

As a first pass I decided to use bag of words to represent articles and cosine similarity to rank the article “word bags”.

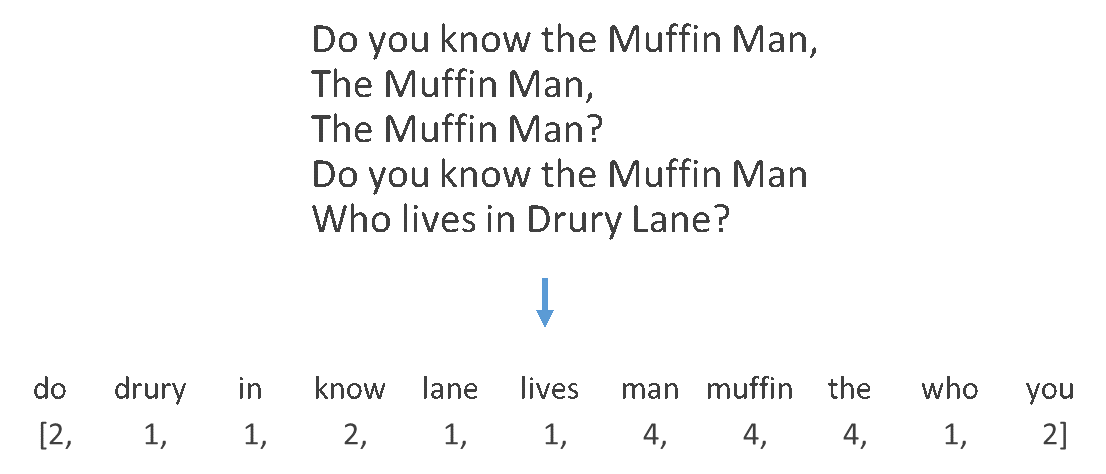

Bag of words takes a text and turns it into a vector of numbers, which is much easier to compare than the raw text. Each element of the vector corresponds to a word, and its value is the number of times that word appears in the text.

Since only the number of times each word appears is counted, and not the position of the word or its relationship to other words, you basically represent a text as just a grab bag of words. Because of this it’s simple, fast, easy to implement, and easy to understand. But it can be overly simple: it ignores the structure the words are in, and variations of the same word (‘aluminum’ and ‘aluminium’) are treated as different words.

In practice vectors need to have a finite number of dimensions so we choose to only have the n most common words in the training corpus make up our vectors (in my case n = 1000). Importantly only these words will be counted in new articles so we have a fixed basis.
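
To make the fixed-vocabulary idea concrete, here is a minimal pure-Python sketch. It is not scikit-learn's implementation; the helper names `build_vocabulary` and `bag_of_words`, the toy library, and the choice of n = 3 are all made up for illustration.

```python
from collections import Counter

def build_vocabulary(documents, n):
    """Return the n most common words across all documents, in a fixed order."""
    counts = Counter(word for doc in documents for word in doc.split())
    # Sort by descending count, then alphabetically, so ties are deterministic
    ranked = sorted(counts.items(), key=lambda item: (-item[1], item[0]))
    return [word for word, _ in ranked[:n]]

def bag_of_words(document, vocabulary):
    """Count how often each vocabulary word occurs in one document."""
    counts = Counter(document.split())
    # Words outside the vocabulary are simply never looked up
    return [counts[word] for word in vocabulary]

# Toy "library" of already-cleaned texts (hypothetical data)
library = [
    "graphene plasmons graphene ribbons",
    "exciton coherence monolayer semiconductors",
    "graphene exciton dynamics",
]
vocabulary = build_vocabulary(library, n=3)

# A new article is counted against the same fixed vocabulary
new_article = "graphene excitons couple graphene plasmons"
vector = bag_of_words(new_article, vocabulary)
print(vocabulary, vector)
```

Here the vocabulary is `['graphene', 'exciton', 'coherence']` and the new article maps to `[2, 0, 0]`: ‘excitons’ does not match ‘exciton’, and ‘plasmons’ and ‘couple’ fall outside the vocabulary, so none of them are counted.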

Now I’d like to rank new articles based on their similarity to my library. By comparing the bag of words vector of a new article to the average vector of my library, we can see how similar new articles are to the prototypical article I would read. I use cosine similarity to make this quantitative. Cosine similarity compares how similar the two vectors are by finding the cosine of the angle between them (\(\cos{\theta} = \frac{U \cdot V}{|U| |V|}\)). If two vectors are pointing in the same direction, the angle between them will be small and the cosine of that angle will be near 1. With the single number score from cosine similarity I can directly rank how close new articles are to my library.
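
As a rough illustration of the scoring idea, the sketch below averages a few toy library vectors and compares two new vectors against that average. The numbers and the names `cosine_similarity` and `average_vector` are invented for the example, and it returns 0.0 for an all-zero vector, which is one possible convention rather than the only one.

```python
import math

def cosine_similarity(u, v):
    """Cosine of the angle between two equal-length vectors.

    Returns 0.0 if either vector is all zeros (an assumed convention).
    """
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    if norm_u == 0 or norm_v == 0:
        return 0.0
    return dot / (norm_u * norm_v)

def average_vector(vectors):
    """Element-wise mean of a list of equal-length vectors."""
    return [sum(column) / len(vectors) for column in zip(*vectors)]

# Toy bag of words vectors for three library articles (hypothetical data)
library_vectors = [[2, 1, 0], [1, 0, 1], [3, 1, 0]]
library_average = average_vector(library_vectors)

on_topic = [4, 1, 0]    # mostly uses the words the library favours
off_topic = [0, 0, 5]   # mostly uses a word the library rarely contains

score_on = cosine_similarity(on_topic, library_average)
score_off = cosine_similarity(off_topic, library_average)
print(round(score_on, 2), round(score_off, 2))
```

The on-topic vector scores about 0.98 and the off-topic one about 0.16. Because only the angle matters, a long abstract that repeats the same words more often does not score higher than a short one with the same word proportions.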

Implementation

To implement the article recommender I chose to go with a modular design where loading the library to train against, training and scoring, and loading new articles to rank would each be separate classes. This allows me to add new components in the future, such as getting new articles from Google Scholar or loading a BibTeX library, without having to rewrite the core scoring algorithms. I’ve simplified some of the code for readability, but the general flow is as follows:

- Get training articles: First get article titles and abstracts from my library of read articles. I use the open-source reference manager Zotero to store my articles. Exporting the library as a CSV file is the quickest way to get started.

- Preprocess: Strip anything besides letters and spacing using the regular expressions library, make all text lowercase, and remove common stop words (‘the’, ‘and’, ‘a’, etc.) using the Natural Language Toolkit.

      import re
      from nltk.corpus import stopwords

      def row_to_words(row):
          '''
          Converts a raw library entry to a string of words
          Input: A single row from the library
          Output: A single processed string
          '''
          # Merge columns, separated by a space so the last word of one
          # column does not fuse with the first word of the next
          text = row.fillna("")
          text = " ".join(text)
          # Remove non-letters
          letters_only = re.sub("[^a-zA-Z]", " ", text)
          # Convert to lower case, split into individual words
          words = letters_only.lower().split()
          # Load stop words (searching a set to check if it includes
          # an element is much faster than searching a list)
          stops = set(stopwords.words("english"))
          # Remove stop words
          meaningful_words = [w for w in words if w not in stops]
          # Join the words back into one string separated by a space
          # and return the result
          return " ".join(meaningful_words)

- Vectorize: Create a bag of words vectorizer based on my library using scikit-learn’s CountVectorizer and find the average word vector to compare new articles against.

      import numpy as np
      from sklearn.feature_extraction.text import CountVectorizer

      count_vectorizer = CountVectorizer(analyzer="word",
                                         tokenizer=None,
                                         preprocessor=None,
                                         stop_words=None,
                                         max_features=1000)

      def train_vectorizer(count_vectorizer, cleaned_library):
          # Learn the vocabulary and count words in each library entry
          features = count_vectorizer.fit_transform(cleaned_library)
          features = features.toarray()
          # Sum over articles; once normalized, this points in the same
          # direction as the average vector
          ave_vec = features.sum(axis=0)
          ave_vec = ave_vec / np.linalg.norm(ave_vec)  # Normalize
          return ave_vec

- Get new articles: Pull journal RSS feeds with recently published articles from the feed aggregator Feedly. The python-feedly library provides wrappers to streamline common operations.

      from feedly import client as feedly

      with open('inputs/feedly_client_token.txt', 'r') as f:
          # Strip the trailing newline so it is not sent as part of the token
          FEEDLY_CLIENT_TOKEN = f.readline().strip()

      client = feedly.FeedlyClient(token=FEEDLY_CLIENT_TOKEN, sandbox=False)
      streams = {}

      def load_stream(feed, num_articles=500):
          '''
          Loads unread articles in a feedly stream. Optional parameter
          num_articles sets how many articles to load.
          '''
          stream = client.get_feed_content(
              access_token=FEEDLY_CLIENT_TOKEN,
              streamId=feed[u'id'],
              unreadOnly='true',
              count=num_articles)
          return stream

- Preprocess new articles: Extract title and abstracts from RSS HTML using BeautifulSoup. Clean and vectorize the text the same way as the training samples (function omitted).

      from bs4 import BeautifulSoup

      def get_content(stream):
          '''
          Get the title and abstract of items in an rss stream
          '''
          content_list = []
          for item in stream[u'items']:
              title = item[u'title']
              title_text = remove_html(title)
              try:
                  abstract = item[u'content'][u'content']
                  abstract_text = remove_html(abstract)
              # The article may not contain a content field
              except KeyError:
                  abstract_text = ""
              # The clean function is nearly identical to row_to_words
              article_text = (clean(title_text) + " " +
                              clean(abstract_text))
              content_list.append(article_text)
          return content_list

      def remove_html(rss_field):
          '''
          Removes the HTML tags from specific rss fields
          '''
          soup = BeautifulSoup(rss_field, "html.parser")
          paragraphs = soup.find_all("p")
          # Fields without <p> tags (e.g. plain-text titles) are returned whole
          if not paragraphs:
              return soup.get_text()
          # Get the longest paragraph tag which usually removes the
          # journal name, doi, or author list
          longest_p = max(paragraphs, key=lambda tag: len(str(tag)))
          # Remove HTML
          field_text = longest_p.get_text()
          return field_text

- Rank: Compare the new articles to the average training article vector using cosine similarity.

      import numpy as np

      def score_article(ave_vec, article, vectorizer):
          '''
          Scores an article string by cosine similarity to average library
          vector. It adds an additional 1 in the normalizing denominator to
          prevent divide by zero for empty article strings.
          Returns a numpy array with the article score as a single element.
          '''
          article_vec = vectorizer.transform([article])
          article_vec = article_vec.toarray()
          score = (np.dot(article_vec, ave_vec) /
                   (np.linalg.norm(article_vec) + 1))
          return score

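The training library from the first step is loaded from the Zotero CSV export with pandas:

    import pandas as pd

    full_library = pd.read_csv(library_csv)
    # Keep the two columns of interest, renaming Zotero's 'Abstract Note'
    library = full_library[['Title', 'Abstract Note']].rename(
        columns={'Abstract Note': 'Abstract'})

Outcome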

This program quickly ranks a week’s worth of new papers (500-600). About 7 of the top 10 ranked papers are relevant, and nearly all papers relevant to my research are in the top 30. This saves roughly 30-45 minutes of manual sifting per week and lets me look for articles in more niche journals.

| Title | URL | Score |
|---|---|---|
| Coupling between diffusion and orientation of pentacene molecules ... | http://feeds.nature.com/~r/nmat/rss/aop/~3/5WDMrZ2nT_4/nmat4575 | 0.313582 |
| Chiral atomically thin films | http://feeds.nature.com/~r/nnano/rss/aop/~3/QsHzpeSSRpg/nnano.2016.3 | 0.294491 |
| Controlling spin relaxation with a cavity | http://feeds.nature.com/~r/nature/rss/current/~3/ciM-agFY1BI/natur... | 0.294426 |
| Direct measurement of exciton valley coherence in monolayer WSe2 | http://feeds.nature.com/~r/nphys/rss/aop/~3/y1wbRV0DAl8/nphys3674 | 0.274917 |
| Electrostatic catalysis of a Diels–Alder reaction | http://feeds.nature.com/~r/nature/rss/current/~3/9EIXjtxOevw/natur... | 0.247099 |
| Multi-wave coherent control of a solid-state single emitter | http://feeds.nature.com/~r/nphoton/rss/current/~3/fM48f_mmHSc/npho... | 0.247000 |
| Electro-optic sampling of near-infrared waveforms | http://feeds.nature.com/~r/nphoton/rss/current/~3/ih2CR8lkn54/npho... | 0.240009 |
| Self-homodyne measurement of a dynamic Mollow triplet in the solid... | http://feeds.nature.com/~r/nphoton/rss/current/~3/FL8iMhrGP-g/npho... | 0.234039 |
| Chiral magnetic effect in ZrTe5 | http://feeds.nature.com/~r/nphys/rss/aop/~3/9TMnsh32Hi8/nphys3648 | 0.230376 |
| Condensation on slippery asymmetric bumps | http://feeds.nature.com/~r/nature/rss/current/~3/Ukr2itGNCX0/natur... | 0.229368 |
| Realization of a tunable artificial atom at a supercritically char... | http://feeds.nature.com/~r/nphys/rss/aop/~3/kBJieWtqays/nphys3665 | 0.228723 |
| Experimental realization of two-dimensional synthetic spin–orbit c... | http://feeds.nature.com/~r/nphys/rss/aop/~3/e9bpO5vT08Y/nphys3672 | 0.222773 |
| Collective magnetic response of CeO2 nanoparticles | http://feeds.nature.com/~r/nphys/rss/aop/~3/Hob8ZdJ5bh4/nphys3676 | 0.221313 |
| Observation of room-temperature magnetic skyrmions and their curre... | http://feeds.nature.com/~r/nmat/rss/aop/~3/cpzy9V09J0M/nmat4593 | 0.210910 |
| Coherent control with a short-wavelength free-electron laser | http://feeds.nature.com/~r/nphoton/rss/current/~3/H4xCuMGuyZM/npho... | 0.206225 |
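
A ranked table like the one above could be assembled along these lines. This is only a sketch: `rank_articles`, `toy_score`, the dictionary keys, and the example.org URLs are placeholders invented for illustration, and `toy_score` is a trivial stand-in for the real similarity score.

```python
def rank_articles(articles, score, top_n=15):
    """Return the top_n (title, url, score) tuples, highest score first."""
    scored = [(a["title"], a["url"], score(a["text"])) for a in articles]
    scored.sort(key=lambda row: row[2], reverse=True)
    return scored[:top_n]

def toy_score(text):
    """Placeholder scorer: fraction of words equal to 'graphene'.

    The +1 in the denominator avoids dividing by zero for empty text.
    """
    words = text.split()
    return words.count("graphene") / (len(words) + 1)

# Hypothetical cleaned articles
articles = [
    {"title": "Paper A", "url": "https://example.org/a",
     "text": "graphene plasmons graphene"},
    {"title": "Paper B", "url": "https://example.org/b",
     "text": "protein folding kinetics"},
    {"title": "Paper C", "url": "https://example.org/c",
     "text": "graphene exciton dynamics"},
]

ranking = rank_articles(articles, toy_score)

# Print the ranking as pipe-delimited rows
print("| Title | URL | Score |")
print("|---|---|---|")
for title, url, value in ranking:
    print(f"| {title} | {url} | {value:.6f} |")
```

Passing the scorer in as a function keeps the ranking step independent of how the score is computed, in the same spirit as the modular design described earlier.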